Large-scale multimodal machine learning for public health

The COVID-19 pandemic underscored the critical need for robust infectious disease modeling to inform public health decision-making. However, existing models often struggle to capture the complex interplay among factors such as viral evolution, policy interventions, and human behavior. To address these challenges, my research focuses on developing machine learning frameworks that integrate large-scale multimodal data to support more accurate and actionable public health responses.

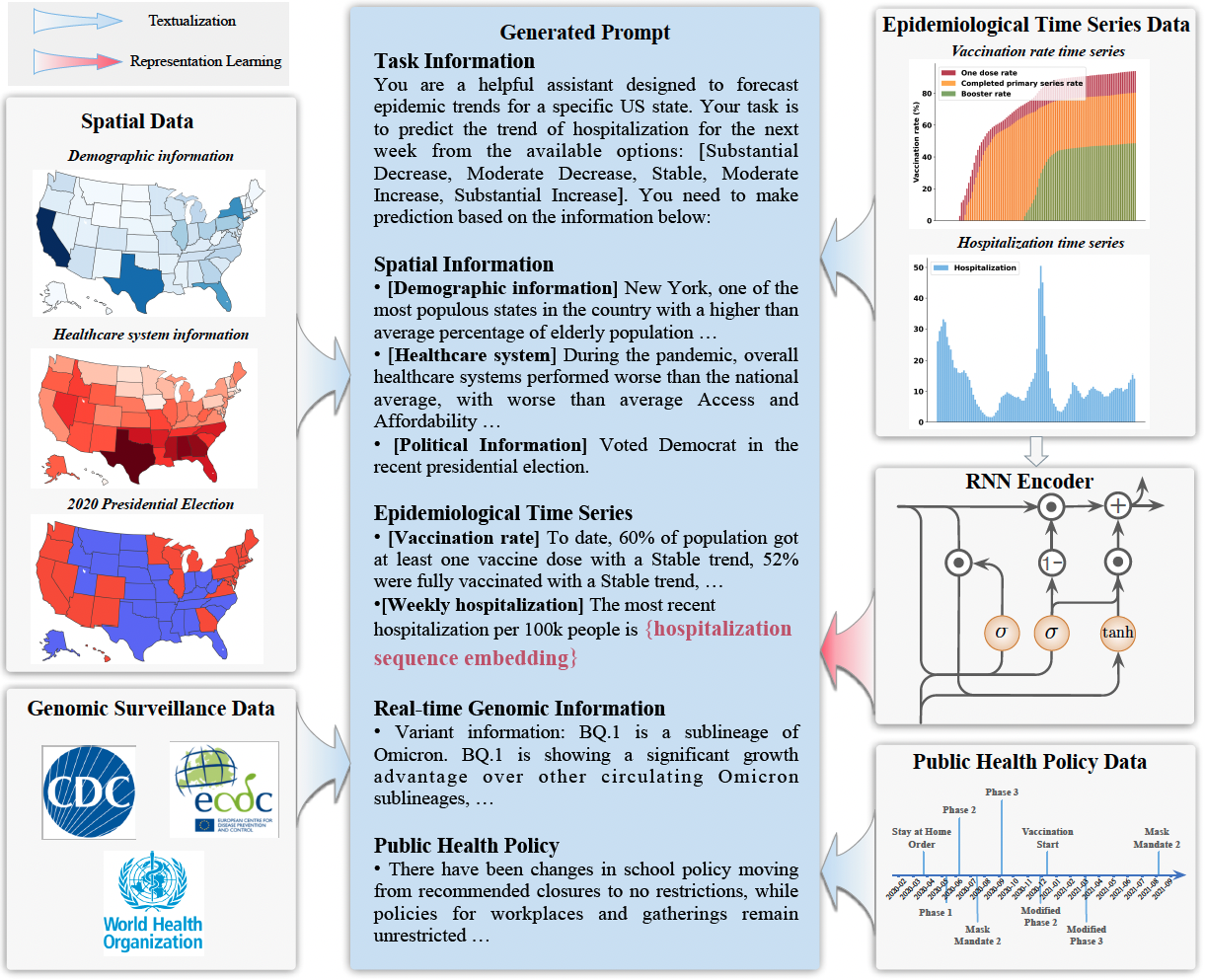

One key example of this research direction is PandemicLLM, a framework that leverages large language models (LLMs) for population-level infectious disease forecasting. PandemicLLM combines an AI-human cooperative prompt design system with representation learning to convert diverse input data into textual formats that LLMs can learn from.
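To make the idea of converting heterogeneous inputs into text concrete, the sketch below serializes one region-week of mixed structured and unstructured data into a single prompt string. It is a minimal illustration under stated assumptions, not PandemicLLM's actual prompt design: the `RegionSnapshot` fields, the wording of each line, and the closing question are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RegionSnapshot:
    # Hypothetical fields chosen for illustration; not PandemicLLM's actual schema.
    region: str
    week: str
    population: int
    fully_vaccinated_pct: float
    policy_summary: str            # unstructured public health policy text
    variant_report: str            # unstructured genomic surveillance summary
    weekly_hospitalizations: List[int]


def build_prompt(s: RegionSnapshot) -> str:
    """Serialize one region-week snapshot into a textual prompt (hypothetical template)."""
    trend = ", ".join(str(x) for x in s.weekly_hospitalizations)
    lines = [
        f"Region: {s.region} (population {s.population:,})",
        f"Week ending: {s.week}",
        f"Fully vaccinated: {s.fully_vaccinated_pct:.1f}%",
        f"Current policies: {s.policy_summary}",
        f"Variant surveillance: {s.variant_report}",
        f"Weekly hospitalizations (oldest to newest): {trend}",
        "Question: How will hospitalizations change over the next week?",
    ]
    return "\n".join(lines)


# Usage with made-up illustrative values (not real surveillance data).
snap = RegionSnapshot(
    region="State A",
    week="2021-12-18",
    population=5_000_000,
    fully_vaccinated_pct=62.5,
    policy_summary="Indoor mask mandate in effect.",
    variant_report="A new variant accounts for a growing share of sequences.",
    weekly_hospitalizations=[410, 455, 520],
)
prompt = build_prompt(snap)
print(prompt)
```

A fixed, human-readable template like this keeps every data stream visible to the model as plain text; in an AI-human cooperative design, the template wording itself would be the part that humans and models iterate on.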

PandemicLLM allows LLMs to learn effectively from time series data by encoding the series into meaningful representations with a customized RNN-based encoder. It also integrates unstructured disease-related data streams (e.g., public health policies and genomic surveillance reports) that have not previously been incorporated into disease forecasting models.
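The general idea of an RNN-based time series encoder can be sketched as follows: a recurrent network reads the series one step at a time, and its final hidden state is projected to a fixed-size vector that could stand in for a "soft token" in an LLM's embedding space. This is a hypothetical, untrained toy in pure Python (a vanilla tanh RNN with random weights); PandemicLLM's actual encoder architecture, training procedure, and the way its output is passed to the LLM are not specified here, and the names `RNNEncoder`, `hidden_dim`, and `embed_dim` are assumptions.

```python
import math
import random
from typing import List


class RNNEncoder:
    """Hypothetical vanilla (Elman) RNN that summarizes a univariate series.

    Weights are random and untrained; the output projection to `embed_dim`
    only stands in for mapping the summary into an LLM embedding space.
    """

    def __init__(self, hidden_dim: int, embed_dim: int, seed: int = 0):
        rng = random.Random(seed)
        scale = 1.0 / math.sqrt(hidden_dim)
        self.hidden_dim = hidden_dim
        # Input-to-hidden weights (one scalar input per time step).
        self.w_in = [rng.uniform(-scale, scale) for _ in range(hidden_dim)]
        # Hidden-to-hidden recurrent weights.
        self.w_hh = [[rng.uniform(-scale, scale) for _ in range(hidden_dim)]
                     for _ in range(hidden_dim)]
        self.b_h = [0.0] * hidden_dim
        # Projection from the final hidden state to the embedding dimension.
        self.w_out = [[rng.uniform(-scale, scale) for _ in range(hidden_dim)]
                      for _ in range(embed_dim)]

    def encode(self, series: List[float]) -> List[float]:
        if not series:
            raise ValueError("series must be non-empty")
        # Standardize so raw counts (e.g., hospitalizations) do not saturate tanh.
        mean = sum(series) / len(series)
        std = math.sqrt(sum((x - mean) ** 2 for x in series) / len(series)) or 1.0
        h = [0.0] * self.hidden_dim
        for x in series:
            z = (x - mean) / std
            # h_t = tanh(w_in * z_t + W_hh @ h_{t-1} + b_h), computed from the old h.
            h = [
                math.tanh(self.w_in[i] * z
                          + sum(self.w_hh[i][j] * h[j] for j in range(self.hidden_dim))
                          + self.b_h[i])
                for i in range(self.hidden_dim)
            ]
        # Linear projection of the final hidden state to one embedding vector.
        return [sum(w * hj for w, hj in zip(row, h)) for row in self.w_out]


encoder = RNNEncoder(hidden_dim=8, embed_dim=16, seed=42)
embedding = encoder.encode([410, 455, 520, 610, 700])
print(len(embedding))
```

In a trained system the encoder weights would be learned jointly with (or aligned to) the LLM so that the resulting vector carries trend information the model can use; here the random weights only demonstrate the data flow from a raw series to a fixed-size representation.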